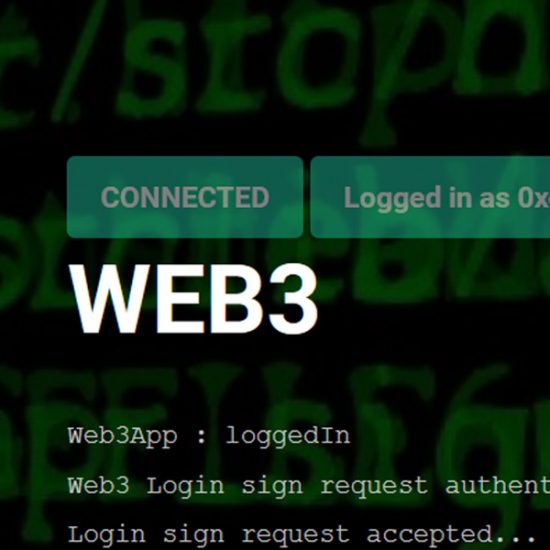

WEB3 is becoming the buzzword of the year – if you don’t know exactly what it is, join the club! Suffice it to say that WEB3 will decentralise the Internet, enabling…Continue reading

Varnish and Magento 2 go together like strawberries and cream – you just cannot have one without the other. Recently I got really confused about the correct way to configure…Continue reading

I recently spent many hours trying to figure out why a custom Magento 2 customer registration attribute was not working, only to find that a relatively simple mistake was the…Continue reading

TL;DR: Migrating the Magento 2 catalog search engine to Smile ElasticSuite will resolve pretty much all the issues you might be experiencing with Magento 2 native Elasticsearch catalog search so…Continue reading

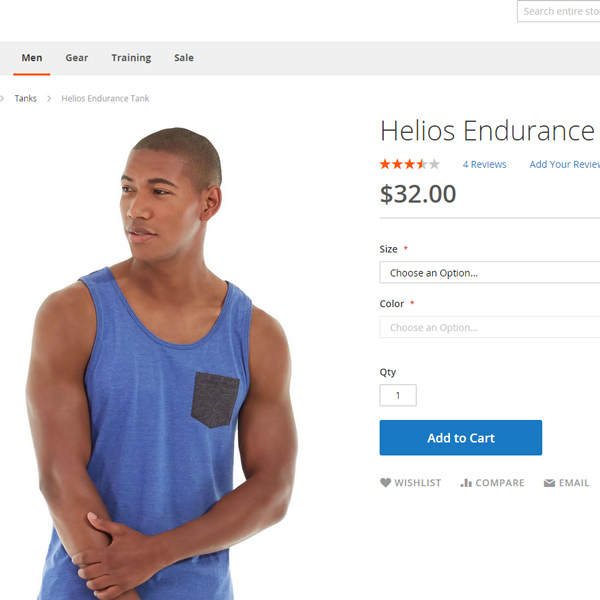

Configurable products have changed a lot in Magento 2. Compared to Magento 1, Magento 2 configurable products are just containers for simple products (variations). You can no longer configure pricing…Continue reading

I was working on my procedures for applying updates to a production Magento 2 site recently and decided it was a pretty good idea to put Magento into maintenance mode…Continue reading

Magento 2 PageSpeed (Lighthouse) performance audit results for mobile and desktop are notoriously bad. Imagine you have worked for months on a new Magento 2 eCommerce store, followed best practices…Continue reading

Gmail lets you send emails from a different email address or alias, so that if you use other email addresses or providers, you can send email from that address via…Continue reading

I am migrating Magento 1.9 to 2.3 – yikes! I wasn’t able to use the Magento 2 migration tool for products so one of the tasks on my TO DO…Continue reading