This post was last updated 6 years 9 months 11 days ago, some of the information contained here may no longer be actual and any referenced software versions may have been updated!

Whilst building containers for Magento 2 I came across the docker compose scale command which allows you to easily duplicate and scale containers running a service in Docker.

For example, if I have a web application service called php-apache with one container already running, the command

docker-compose scale php-apache=10

will start another 9 containers running the php-apache service, giving me 10 containers running the same web application service.

Of course, if you want real resilience and load sharing, Docker swarm is the solution. So the question is: are there any benefits from scaling a service across multiple containers on a single host?

Well, yes, because you can still load balance the traffic to your application and achieve a basic level of container resilience.

Scaled services only work for applications that use private ports, because multiple Docker containers cannot publish the same port on the host. I use an NGINX service container as a reverse proxy to my web application services: NGINX listens on the host's public http port and proxies traffic to the service containers on their private internal http ports.

This scenario scales easily, and there are documented examples that describe how to load balance Docker scaled web application services. However, all the examples I found require manual configuration and a restart of the load balancer whenever service containers are scaled up or down.

I wanted to create a dynamic scaled service where the load balancer or proxy is dynamically updated when services are scaled up or down.

NGINX as a reverse proxy will also load balance when multiple upstream hosts are configured for a website, but the default configuration file must be manually edited and saved, and NGINX restarted, before the changes take effect.

A bit of googling around uncovered a module that allows you to dynamically update the NGINX upstream host configuration. I compiled the module into my existing NGINX service container and tested it and was able to dynamically add and remove upstream hosts, perfect for dynamically scaling Docker services!
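To give an idea of what a dynamic update looks like, here is a minimal sketch using curl against the HTTP interface of the ngx_http_dyups module (the module used in the nginx container described below). As far as I recall from the module's README, posting a list of server entries to /upstream/<name> replaces that upstream, and /detail lists what NGINX is currently using. The interface address (127.0.0.1:8081) and the upstream name (scaledemo) are assumptions for illustration, not values taken from the demo project.

```shell
# Assumptions (not from the post): where the dyups interface listens and
# what the upstream is called.
DYUPS_API="${DYUPS_API:-http://127.0.0.1:8081}"
UPSTREAM="${UPSTREAM:-scaledemo}"

# Build the request body: one "server host:port;" entry per host.
# usage: dyups_body PORT HOST [HOST...]
dyups_body() {
    port="$1"
    shift
    body=""
    for host in "$@"; do
        body="${body}server ${host}:${port};"
    done
    printf '%s' "$body"
}

# Replace the server list of the upstream with the given body.
dyups_update() {
    curl -fsS -d "$1" "${DYUPS_API}/upstream/${UPSTREAM}"
}

# Show every upstream and its servers as NGINX currently sees them.
dyups_detail() {
    curl -fsS "${DYUPS_API}/detail"
}

# Example (needs a running NGINX built with the module):
#   dyups_update "$(dyups_body 80 172.18.0.3 172.18.0.4)"
#   dyups_detail
```

No NGINX restart is involved in either call, which is what makes this approach a good fit for containers that come and go.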

Scaledemo Application

To demonstrate how Docker Mono Host Service Scaling and Dynamic Load Balancing with NGINX works, I put together a group of containers to simulate a scaled web application:

- manager
  - A php service container that acts as the manager for the scaled services project.
- nginx
  - An NGINX container compiled with the ngx_http_dyups dynamic upstream module.
- php-apache
  - A php7, Apache2 service container that will run my demo ‘Bean Counter’ web application.
- memcached
  - Cache server used by the manager to store Docker data, and by the web application to store the ‘Bean Counter’ data.

Manager

Dynamic updates to NGINX are carried out by the manager service container. It pulls Docker system information from the Docker host via a cron job every 60 seconds, parses the data for running service containers that match the configured project web application service container (php-apache) and generates an NGINX upstream host configuration.
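The discovery step can be sketched in shell as follows. This is not the manager's actual php code: it swaps in the docker CLI as the source of container data, and it assumes the project is called scaledemo, that containers are named <project>_<service>_<n> (as with scaledemo_manager_1), that each container sits on a single network, and that the service listens on port 80.

```shell
# Assumptions: Compose project and service names.
PROJECT="${PROJECT:-scaledemo}"
SERVICE="${SERVICE:-php-apache}"

# Names of running containers (docker ps lists only running ones by default).
running_names() {
    docker ps --format '{{.Names}}'
}

# Keep only names of the form <project>_<service>_<n> read from stdin.
match_service() {
    grep -E "^${PROJECT}_${SERVICE}_[0-9]+\$" || true
}

# IP address of one container on its (single) network.
container_ip() {
    docker inspect \
        --format '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' "$1"
}

# Emit one "server ip:port;" entry per matching container. Names are
# sorted so the output stays stable from one poll to the next.
generate_upstream() {
    port="${1:-80}"
    running_names | match_service | sort | while read -r name; do
        printf 'server %s:%s;' "$(container_ip "$name")" "$port"
    done
}

# Example (needs access to the Docker daemon):
#   generate_upstream 80
```

Keeping the output in a stable order matters because the next step is a plain comparison against what NGINX is already running.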

The manager compares the upstream host configuration running on NGINX with the generated configuration from Docker. If they differ it updates NGINX via php-curl with the new configuration. The parsed manager Docker data is stored on the memcache server so that it is available to other services.
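The compare-and-update step might look roughly like the sketch below. It is a shell stand-in for what the manager does via php-curl, and it leaves out the memcached caching. The interface address and upstream name are again assumptions, and because I am not certain of the exact text format the module returns, both configurations are reduced to a sorted list of ip:port pairs before they are compared.

```shell
# Assumptions (not from the post): dyups interface address and upstream name.
DYUPS_API="${DYUPS_API:-http://127.0.0.1:8081}"
UPSTREAM="${UPSTREAM:-scaledemo}"

# Reduce any text containing "server ip:port" entries to a sorted list of
# ip:port pairs, so differently formatted configs can be compared.
normalize() {
    grep -oE '[0-9]+(\.[0-9]+){3}:[0-9]+' | sort -u
}

# Succeeds (exit 0) when the two configs describe different server sets.
config_differs() {
    [ "$(printf '%s' "$1" | normalize)" != "$(printf '%s' "$2" | normalize)" ]
}

# One manager heartbeat: push the generated config only if it differs from
# what NGINX is running. An unknown upstream counts as an empty config.
# $1 = generated config, e.g. "server 172.18.0.3:80;server 172.18.0.4:80;"
heartbeat() {
    running="$(curl -fsS "${DYUPS_API}/upstream/${UPSTREAM}")" || running=""
    if config_differs "$running" "$1"; then
        curl -fsS -d "$1" "${DYUPS_API}/upstream/${UPSTREAM}"
    fi
}

# Example (needs a running NGINX built with the module):
#   heartbeat "server 172.18.0.3:80;server 172.18.0.4:80;"
```

Run from cron once a minute, a function like heartbeat does nothing when the service has not been scaled, so NGINX is only touched when the set of containers actually changes.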

When I scale the php-apache service up or down NGINX is dynamically configured with the changes at the next manager update, or heartbeat. I can also manually force the update via a cli command on the manager.

Demo

Watch the video below to see the scale demo in action.

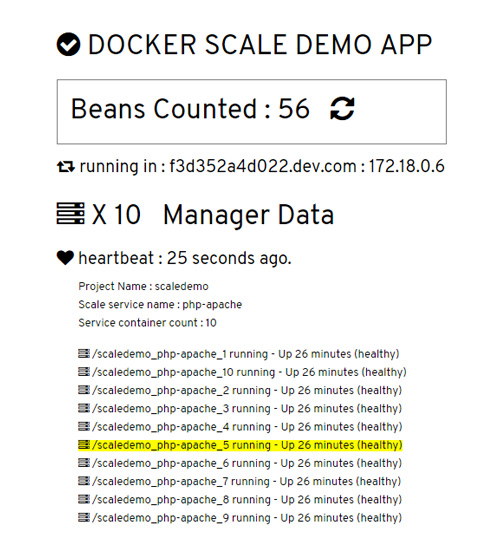

In the video I start the containers with docker compose, scale php-apache up to 10 instances and check the dynamic update and round robin load balancing via the demo web application that parses the manager data from memcache and also increments a counter.

I then scale it up again to 20 instances and manually update the nginx configuration via the manager. You can see NGINX load balancing the web application with round robin requests to each of the 20 service containers as I click the refresh button to increment the bean counter and reload the page. The container that served the page is highlighted in yellow.

Finally I scale the service back down to 2 instances and stop the containers.

You can build the Docker containers and try out the demo yourself using the docker-compose files from GitHub.

- Clone the project files
  - git clone https://github.com/gaiterjones/docker-scaledemo
- Build the containers
  - cd scaledemo
  - docker-compose build
- Make sure there are no other services using port 80 on your host and start the containers
  - docker-compose up -d
- Point your browser at http://scaledemo.dev.com (which should resolve to your Docker host) to see the demo page
- Scale the php-apache container
  - docker-compose scale php-apache=5

To manually update NGINX from the scale manager

- Connect to the manager container
  - docker exec -t -i scaledemo_manager_1 /bin/bash
- Run the scalemanager php script
  - php cron.php update scalemanager
- Or run the script in one step from the host
  - docker exec scaledemo_manager_1 php cron.php scalemanager
